When network bandwidth in a cloud computing infrastructure is insufficient, a QoS (Quality of Service) mechanism is used to preserve overall QoE (Quality of Experience): it guarantees the performance of crucial applications at the expense of less important applications, whose traffic may be delayed or dropped.

General QoS mechanisms can be applied to cloud computing in the same way as in other use cases. These include:

- Packet classification

- Marking and queuing

- RED (Random Early Discard) queue management algorithm

- Scheduling principles: priority-based, round robin, weighted round robin

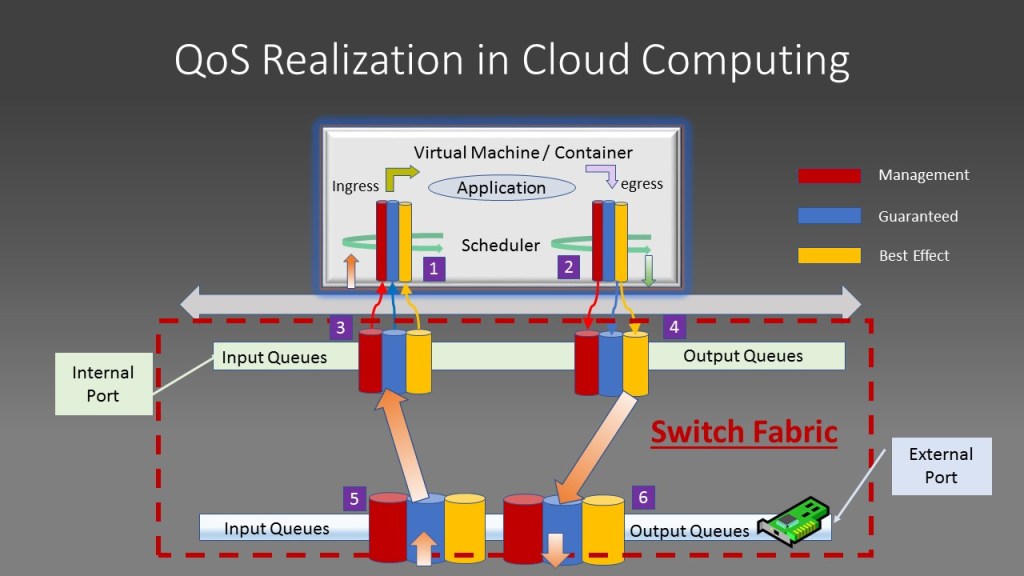

Take deploying an application in an OpenStack virtual machine as an example. The virtual machine hosting your application is launched on a compute node of the OpenStack infrastructure. First, the virtual machine image must support multiple queues on its virtual network interface, on both the input and the output side. Outgoing traffic that your application has marked with different priorities (for example, by mapping the packet's DSCP field to a traffic priority) and incoming traffic that was already marked by its originating source can then be placed into different queues, to which RED and the scheduling principles are applied. The diagram above illustrates this.

Here, the red queue stores the most critical traffic, usually network management traffic. The blue queue holds guaranteed user traffic, also called CIR (Committed Information Rate) traffic, and the yellow queue stores best-effort traffic, which can be oversubscribed and is discarded when there is not enough bandwidth to carry it. A packet is placed into one of these three queues according to the marking it carries on arrival (incoming traffic) or the priority marking assigned to it (outgoing traffic). More than three classes and queues can be used to fine-tune network performance, but three traffic classes with corresponding queues have proven sufficient across many use cases and traffic profiles.
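The classification step can be sketched in Python as follows. This is a minimal illustration, not how any particular virtual switch implements it: the `Packet` type, the `classify` and `enqueue` helpers, and the DSCP-to-queue policy are all assumptions made for the example. The code points themselves (CS6 = 48, CS7 = 56, EF = 46, AF41 = 34) are standard values.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical policy: which DSCP code points map to which queue.
CRITICAL_DSCP = {48, 56}    # CS6, CS7: network control / management
GUARANTEED_DSCP = {46, 34}  # EF, AF41: treated here as CIR traffic


@dataclass
class Packet:
    dscp: int            # 6-bit DSCP value carried in the IP header
    payload: bytes = b""


def classify(packet: Packet) -> str:
    """Return the name of the queue a packet belongs to."""
    if packet.dscp in CRITICAL_DSCP:
        return "red"
    if packet.dscp in GUARANTEED_DSCP:
        return "blue"
    return "yellow"      # everything else is best effort


# One FIFO queue per traffic class.
queues = {"red": deque(), "blue": deque(), "yellow": deque()}


def enqueue(packet: Packet) -> str:
    """Place the packet in its class queue and return the queue name."""
    name = classify(packet)
    queues[name].append(packet)
    return name
```

Unmarked traffic (DSCP 0) falls through to the yellow queue, which matches the best-effort role described above.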

Once packets are queued, the scheduler picks packets from the queues using priority-based and round-robin scheduling. All packets in the red queue must be served before any packet in the blue or yellow queue. Between the blue and yellow queues, packets can be served by strict priority or by weighted round robin: either drain all packets from the blue queue before serving any from the yellow queue, or serve by weight, for example five packets from the blue queue for every one packet from the yellow queue. Using multiple queues achieves the QoS effect and avoids HOL (Head-of-Line) blocking.
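A rough Python sketch of this combined scheme is shown below: strict priority for the red queue, then weighted round robin between blue and yellow. The `schedule` function and its parameter names are hypothetical, and the default 5:1 weights simply reuse the example ratio from the text.

```python
from collections import deque


def schedule(red, blue, yellow, blue_weight=5, yellow_weight=1):
    """Yield packets in service order.

    The red queue is served with strict priority; blue and yellow share
    the remaining capacity by weighted round robin.
    """
    while red or blue or yellow:
        # Strict priority: the red queue is always drained first.
        if red:
            yield red.popleft()
            continue
        # Weighted round robin: up to blue_weight packets from blue ...
        for _ in range(blue_weight):
            if red or not blue:
                break  # stop early if red traffic arrived or blue is empty
            yield blue.popleft()
        # ... then up to yellow_weight packets from yellow.
        for _ in range(yellow_weight):
            if red or not yellow:
                break
            yield yellow.popleft()


red = deque(["r1", "r2"])
blue = deque(["b1", "b2", "b3", "b4", "b5", "b6"])
yellow = deque(["y1", "y2"])
order = list(schedule(red, blue, yellow))
# order == ["r1", "r2", "b1", "b2", "b3", "b4", "b5", "y1", "b6", "y2"]
```

Setting `yellow_weight` to 0 is not the same as strict priority in this sketch; to drain blue completely before yellow, the weighted loops would be replaced by a second strict-priority check.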

In the OpenStack use case, traffic to and from virtual machines passes through the switch fabric of the compute node, which is normally implemented with OVS (Open vSwitch) or a similar technology. The switch fabric opens an internal network port for each network interface of each virtual machine attached to it, for example ports 3 and 4 in the above diagram. The physical network interfaces are also attached to this switch fabric, represented by ports 4 and 5 in the above diagram. Multiple queues and the scheduling principles are used on all interfaces, whether the port is internal or mapped to a physical interface card. Traffic classification, marking, queuing, RED queue management, and priority and round-robin scheduling are applied in the same way.
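The RED queue management applied on each port can be illustrated with a simplified Python sketch. It keeps an exponentially weighted moving average of the queue length and drops arriving packets with a probability that rises linearly between two thresholds. The `RedQueue` class, its parameter names, and the default values are assumptions for illustration, and the sketch omits refinements of the full algorithm, such as adjusting the probability by the number of packets accepted since the last drop.

```python
import random


class RedQueue:
    """Simplified RED: probabilistic early drop based on average queue length."""

    def __init__(self, min_th=5, max_th=15, max_p=0.1, weight=0.2, rng=None):
        self.min_th = min_th   # below this average length, never drop
        self.max_th = max_th   # at or above this average length, always drop
        self.max_p = max_p     # drop probability just below max_th
        self.weight = weight   # smoothing factor for the moving average
        self.rng = rng or random.Random()
        self.avg = 0.0
        self.items = []

    def drop_probability(self) -> float:
        if self.avg < self.min_th:
            return 0.0
        if self.avg >= self.max_th:
            return 1.0
        # Linear ramp from 0 to max_p between the two thresholds.
        return self.max_p * (self.avg - self.min_th) / (self.max_th - self.min_th)

    def enqueue(self, packet) -> bool:
        """Return True if the packet was queued, False if it was dropped."""
        # Update the moving average with the current instantaneous length.
        self.avg = (1 - self.weight) * self.avg + self.weight * len(self.items)
        if self.rng.random() < self.drop_probability():
            return False
        self.items.append(packet)
        return True
```

Dropping a few packets early, before the queue is full, signals congestion to senders such as TCP gradually instead of discarding a burst of packets all at once when the queue overflows.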

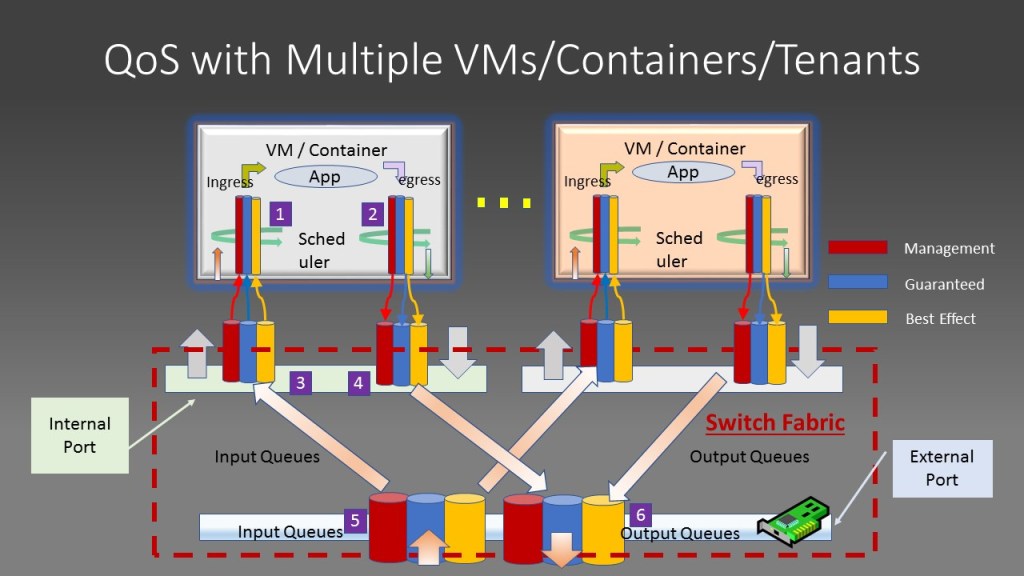

These principles are applied hierarchically: the queues of the physical interface aggregate, queue, and dequeue traffic from the multiple virtual machines residing on the compute node. The same QoS principles are applied between traffic from different virtual machines. This holds when multiple virtual machines and multiple tenants exist within the same cluster, as illustrated in the diagram above.

Here, two virtual machines exist on the same compute node of the cluster. They can belong to the same tenant or to different tenants. The physical ports (ports 5 and 6) queue and dequeue traffic from the different virtual machines. Creative QoS policies can be applied on this aggregated interface: treat all tenant traffic equally, or assign traffic weights to tenants to reflect service level agreement (SLA) tiers such as platinum, gold, and bronze, so that customers are served in differentiated ways.
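As a small worked example of tier-based weighting, the Python sketch below splits the bandwidth of a physical port among the tenants that currently have traffic, in proportion to their weights. The `tenant_shares` helper, the 4:2:1 weights, and the 700 Mbps link rate are hypothetical values chosen for the illustration.

```python
def tenant_shares(link_mbps, weights):
    """Split link bandwidth among active tenants in proportion to their weights."""
    total = sum(weights.values())
    return {tenant: link_mbps * w / total for tenant, w in weights.items()}


# Hypothetical SLA tiers expressed as scheduling weights.
tiers = {"platinum": 4, "gold": 2, "bronze": 1}
shares = tenant_shares(700, tiers)
# shares == {"platinum": 400.0, "gold": 200.0, "bronze": 100.0}

# Equal weights reproduce the "treat all tenants equally" policy.
equal = tenant_shares(900, {"a": 1, "b": 1, "c": 1})
# equal == {"a": 300.0, "b": 300.0, "c": 300.0}
```

In a weighted round-robin scheduler these same weights would serve as the per-tenant packet or byte quotas for each round.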

Kubernetes enforces QoS for container-based applications in a similar way, using the traffic control features of the underlying Linux operating system.